Every day when you come into work, you’re bombarded with a constant stream of problems. From service desk calls to network performance monitoring, you’re busy from the moment you log in until the moment you click the “shut down” option on your device. Even more frustrating, your IT environment consists of an ever-expanding set of network segments, applications, devices, users, and databases across on-premises and cloud locations.

Observability is important to IT operations because it allows you to collect, aggregate, and correlate real-time data so that you can analyze what’s happening in your environment for better overall service outcomes.

What is observability?

Observability is the process of collecting data from endpoints and services to measure a system’s current state. In cloud environments, observability comes from aggregating and correlating logs, metrics, and traces from:

- Hardware

- Software

- Cloud infrastructure components, like containers

- Open-source tools

- Microservices

Observability enables detection and analysis of an event’s impact on:

- Operations

- Software development lifecycles (SDLC)

- Application security

- End-user experience

What is the difference between observability and monitoring?

Many people use the terms observability and monitoring interchangeably. While related and often complementary, the two words have important distinctions.

Observability is an objective state, a visibility based on collected data, that allows you to gain insights. When a system is observable, you have health and performance data.

Monitoring is a proactive activity that you engage in using observability. Without observability, you can’t effectively monitor systems.

Why is observability important?

In distributed, interconnected IT systems, you have many components interacting with one another. When one component experiences a failure or problem, it can impact a seemingly unrelated system element.

In constantly changing cloud environments, interconnected applications, networks, and devices mean that a single change can have a wide-reaching impact, and these changes happen continuously. With observability, you can see an issue, like a slow network or device misconfiguration, and understand its potential impact on other components. While monitoring can tell you when something expected happens unexpectedly, observability helps you see the unexpected when it occurs unexpectedly.

Additionally, observability gives you a way to improve customers’ digital experiences. If you offer digital services, you can use the collected data to track conversions and usage so that your organization can make informed business decisions that improve user experiences.

Benefits of observability

Today, technology is embedded into every company’s business model. With observability, different teams can collaborate more effectively because they all share the same data.

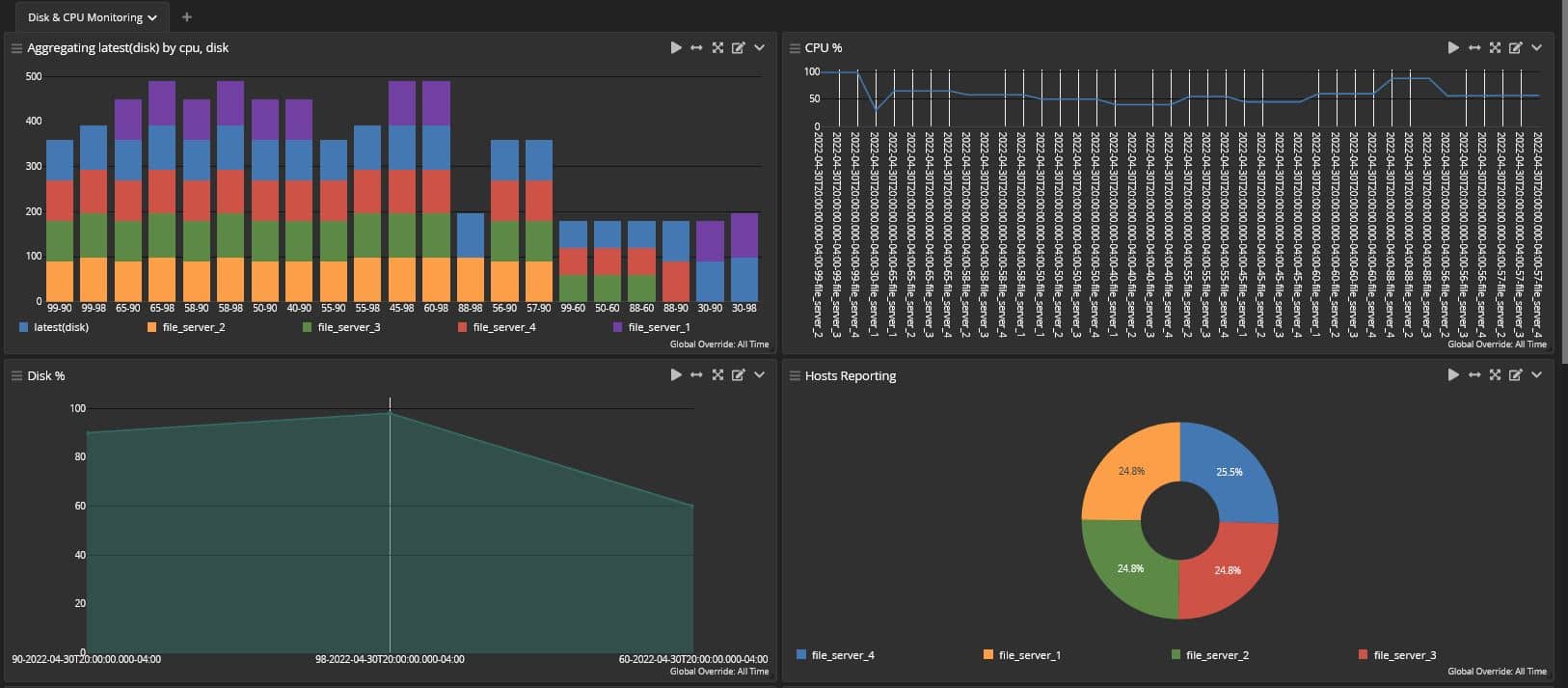

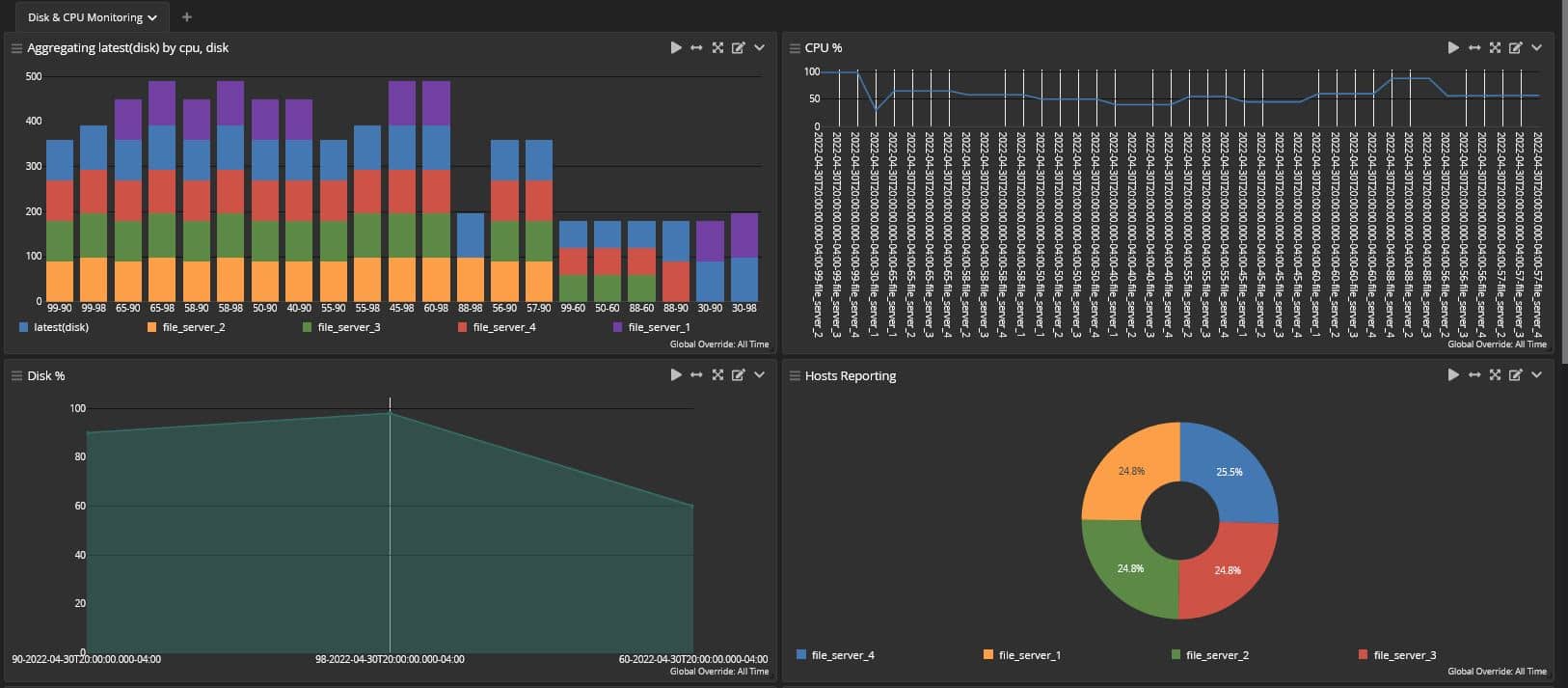

Infrastructure monitoring

When you have observability, your infrastructure and operations (I&O) team can use that context to improve performance, including:

- Reduced mean time to identify and remediate issues

- Cloud latency issue detection

- Resource utilization optimization

Application performance

With observability, you can more rapidly identify issues across cloud-native and microservices architectures. Additionally, IT ops and DevOps teams can collaborate more effectively.

Application security

When developers build applications designed to be observed, DevSecOps teams can use the insights gained to automate testing and CI/CD processes.

End-user experience

With observability, you can monitor applications and environments to proactively detect and resolve issues. If you fix a problem before the end-user can report it, you increase satisfaction and reduce service desk calls.

Understanding the “Three Pillars of Observability”

You need three classes of data to achieve observability: logs, metrics, and traces. However, you also need to aggregate and correlate these “three pillars of observability” because, if they remain siloed, you won’t gain insight into which problems occur or why they happen.

Logs

Every activity that happens in your environment generates an event log with details that include:

- Time stamp

- Event type

- Machine or user ID

Whether structured or unstructured, written in plaintext or binary, this data includes text and metadata that you can use for queries, making it critical to observability and monitoring.
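To make the fields above concrete, here is a minimal sketch of a structured log event and a field-based query. The helper names (`make_event`, `query`) and the sample field values are assumptions for illustration only; they are not part of any particular log management product.

```python
import json
from datetime import datetime, timezone


def make_event(event_type, source_id, message, **fields):
    """Build a structured log event as a plain dict (hypothetical schema)."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),  # time stamp
        "event_type": event_type,                             # event type
        "source_id": source_id,                               # machine or user ID
        "message": message,
        **fields,                                             # extra metadata
    }


def query(events, **criteria):
    """Return the events whose fields equal every key/value in criteria."""
    return [e for e in events
            if all(e.get(key) == value for key, value in criteria.items())]


events = [
    make_event("login_failed", "user-42", "Bad password", host="web-01"),
    make_event("login_ok", "user-42", "Login succeeded", host="web-01"),
    make_event("disk_full", "db-01", "Volume /data at 100%", host="db-01"),
]

failed = query(events, event_type="login_failed")
print(json.dumps(failed[0], indent=2))
```

Because each event is a set of named fields rather than a free-form string, the same `query` call works for any field, for example `query(events, host="web-01")`.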

Metrics

Metrics are structured data providing quantitative values that you can use to track trends over time. Typically, you use metrics for:

- Key performance indicators (KPIs)

- CPU capacity insights

- Memory monitoring

- System health and performance assessments
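As a rough illustration of tracking a trend over time, the sketch below smooths a series of CPU readings with a moving average and checks whether the smoothed values keep rising. The sample readings and the window size are made-up values, and a moving average is only one of many ways to look at a trend.

```python
from statistics import mean


def moving_average(values, window):
    """Average of each consecutive `window`-sized slice of `values`."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [mean(values[i:i + window])
            for i in range(len(values) - window + 1)]


# Hypothetical CPU utilization samples (percent), one per minute.
cpu_percent = [40, 42, 45, 50, 58, 67, 75, 84]

trend = moving_average(cpu_percent, window=4)

# True when every smoothed value is higher than the one before it.
rising = all(later > earlier for earlier, later in zip(trend, trend[1:]))

print(trend)
print("CPU usage is trending upward:", rising)
```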

Traces

A trace documents how a request moves across a distributed system, giving you a way to see how a user request flows from the original interface until the user receives confirmation of receipt. Each operation performed on the request is recorded as a “span,” encoded data that includes information about the microservice performing the operation.

Traces can include more than one span, creating observability that helps identify bottlenecks or breakdowns.
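The sketch below shows one way spans could be used to find a bottleneck. The `Span` fields, service names, and durations are hypothetical, and the "self time" calculation assumes that child spans run one after another inside their parent, which is a simplification.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    span_id: str
    parent_id: Optional[str]  # None marks the root span of the trace
    service: str
    duration_ms: float


def self_times(spans):
    """Map span_id -> time spent in the span itself, excluding direct children."""
    child_total = {}
    for span in spans:
        if span.parent_id is not None:
            child_total[span.parent_id] = (
                child_total.get(span.parent_id, 0.0) + span.duration_ms
            )
    return {s.span_id: s.duration_ms - child_total.get(s.span_id, 0.0)
            for s in spans}


def bottleneck(spans):
    """Return the span with the largest self time."""
    times = self_times(spans)
    return max(spans, key=lambda s: times[s.span_id])


# One hypothetical trace: gateway -> checkout -> (inventory, payment)
trace = [
    Span("a", None, "gateway", 480.0),
    Span("b", "a", "checkout", 450.0),
    Span("c", "b", "inventory", 60.0),
    Span("d", "b", "payment", 350.0),
]

print("Slowest service:", bottleneck(trace).service)
```

Comparing self time rather than total duration avoids always blaming the root span, whose duration includes everything beneath it.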

Centralized Log Management for Observability

A purpose-built centralized log management solution gives you the necessary observability, the collaboration capabilities needed to make observability useful, and the analytics that enable proactive monitoring.

Create a Single Source of Truth

Observability requires you to aggregate and correlate log data, metrics, and traces in a single location so that you have a bird’s eye view of everything happening in your environment. Manual monitoring across multiple vendor-supplied tools leaves your log data, metrics, and traces siloed. With everything in one place, you can see the dependencies across devices, users, and databases, whether they’re on-premises or in the cloud.

This becomes even more important when you have multiple teams using the data. For example, when DevOps and IT Ops have the same visibility, they can work together to fix a service issue. A purpose-built centralized log management solution enables observability and collaboration.
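As a simplified picture of what correlating data in a single location can mean, the sketch below pulls logs, metrics, and trace records for one host into a single timeline around an incident. The record layout, host names, and the 60-second window are assumptions made for this example, not a description of how any specific product stores data.

```python
from datetime import datetime, timedelta


def correlate(records, host, around, window_s=60):
    """Return records for `host` within `window_s` seconds of `around`, oldest first."""
    delta = timedelta(seconds=window_s)
    hits = [r for r in records
            if r["host"] == host and abs(r["time"] - around) <= delta]
    return sorted(hits, key=lambda r: r["time"])


t0 = datetime(2024, 1, 1, 12, 0, 0)  # hypothetical incident time

records = [
    {"kind": "log", "host": "db-01", "time": t0,
     "detail": "write failed: no space left"},
    {"kind": "metric", "host": "db-01", "time": t0 - timedelta(seconds=30),
     "detail": "disk_used_pct=99"},
    {"kind": "trace", "host": "db-01", "time": t0 + timedelta(seconds=5),
     "detail": "span save_order took 4200 ms"},
    {"kind": "log", "host": "web-01", "time": t0,
     "detail": "GET /health 200"},
    {"kind": "metric", "host": "db-01", "time": t0 - timedelta(hours=2),
     "detail": "disk_used_pct=71"},
]

timeline = correlate(records, "db-01", around=t0)
for record in timeline:
    print(record["time"].time(), record["kind"], record["detail"])
```

Read together, the three records tell one story (the disk filled, a write failed, a request slowed down) that none of them tells alone.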

Make Observability Actionable and Scalable

Observability is something you need to have, but it’s also something you need to be able to use. If all you can do is see that a problem exists, you’re not gaining observability’s full benefit. One benefit of a centralized log management solution is that by aggregating, correlating, and analyzing log data, metrics, and traces, you can create a custom set of rules that trigger responses. This prevents a minor issue from becoming more severe.

Further, a cloud-native centralized log management solution is scalable because you can pay as you go to save money. Many companies store their log data in containers for scalability. Unfortunately, if the container is destroyed, so are all those files. A cloud-native centralized log management solution gives you the best of both worlds, especially when you need access to historical information quickly when trying to investigate an issue.
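The custom rules that trigger responses, mentioned earlier in this section, can be pictured as pairs of a condition and an action. The sketch below is a generic illustration only: the `Rule` class, the thresholds, and the response strings are hypothetical and do not reflect any product's actual rule syntax.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]  # decides whether the rule fires
    action: Callable[[dict], str]      # describes the response to take


def evaluate(rules, event):
    """Run every rule whose condition matches; return the resulting responses."""
    return [rule.action(event) for rule in rules if rule.condition(event)]


rules = [
    Rule(
        "disk-nearly-full",
        lambda e: e.get("disk_used_pct", 0) >= 90,  # assumed threshold
        lambda e: f"open ticket: disk at {e['disk_used_pct']}% on {e['host']}",
    ),
    Rule(
        "repeated-login-failures",
        lambda e: e.get("failed_logins", 0) >= 5,   # assumed threshold
        lambda e: f"notify on-call: {e['failed_logins']} failed logins on {e['host']}",
    ),
]

responses = evaluate(rules, {"host": "db-01", "disk_used_pct": 93})
print(responses)
```

In a real deployment the action would call a ticketing or messaging integration instead of returning a string; returning text keeps the sketch self-contained.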

Improve operational effectiveness and efficiency

Observability enables collaboration only if you can create effective, efficient operational workflows. Alone, observability does nothing. Observability exists to serve a purpose, like monitoring or incident investigations.

A centralized log management solution integrates with business tools, like email, Slack, or ticketing platforms. With these integrations, you can build the automations that improve operational processes to detect, investigate, and resolve issues faster.

Graylog Operations: Actionable, Integratable, Cloud-Native Observability

Maintaining maximum uptime and peak performance requires observability. Graylog Operations provides a cost-efficient solution for IT ops so that organizations can implement robust infrastructure monitoring while staying within budget. With our solution, IT ops can analyze historical data regularly to identify potential slowdowns or system failures while creating alerts that help anticipate issues. By sending alerts via text message, email, Slack, or Jira ticket, you reduce response time and collaborate efficiently with others.

You can use Graylog Operations to leverage observability for managing complex, distributed environments because it offers the flexibility and scalability IT ops needs.