The phone rings. Your email pings. Your marketing team just told you that social media and live chat are flooded with messages about a service outage. You thought your Monday morning would be calm and relaxed since people are just returning from the weekend.

How do you start researching all of these incoming tickets? How do you know which ones to handle first? Is this just a hardware failure, or are you about to embark on a security incident investigation like Log4j?

Right now, you’re wishing that you’d been scanning through your log data as part of your daily workflow. You just didn’t have the time or technology that would give you the ability to detect these problems in advance. After all, you’ve got a bunch of different tools, and the data you need is spread across all of them.

This is why normalizing log data in a centralized logging setup is important, whether you want to be proactive or need to be reactive quickly.

Data Collection: The First Step To Normalizing Data

You can’t answer questions – your own or those of your customers – without data. The problem is that you have so much of it coming in from your various users, devices, systems, and networks.

Data collection can sometimes feel like starting your car while the parking brake is still on. The minute you put your foot on the gas, you realize you’re not moving as fast as you should. Even worse, if you keep accelerating, you might cause major damage.

You want to bring in all the data your on-premises and cloud assets generate, but you don’t have a way to do this efficiently. It might not matter anyway if you can’t correlate the information because every technology has a different format.

In short, you have the data. You don’t have the right tools to help you add “review” as part of your daily routine. You also don’t have a way to do searches – proactively or reactively – fast.

You feel like you need an extra set of hands to help you decide whether this is an operational or security incident. This is where centralized log management with pre-structured data can help.

Pre-Structured Data: Parsing Event Log Data on the Front End

You want a log management solution because you need answers. You need to prevent service outages, detect security risks, or both. Either way, you can’t do this efficiently unless reviewing log data is part of your daily work.

The catch is that event log data is written for machines, which makes it hard for people to read. Log aggregation with normalized data makes log analysis easier and faster.

If you want to run effective, efficient searches, you must prepare your data beforehand. Parsing data during the search process makes every search slow and expensive, so preparing it in advance speeds up both routine and emergency reviews. Parsing data up front produces faster search times because your queries run against data that has already been organized into meaningful fields.

Normalizing event log files on the front end means that almost everyone can access, search, and analyze event log data in a reliable, repeatable way. High volumes of logs are easier to manage when you have all the information in one place, all in the same format.
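To make the idea concrete, here is a minimal Python sketch of parsing at ingestion. It assumes two hypothetical sources. One is a firewall that emits key=value pairs. The other is a web server that writes a common access-log line. The sketch maps both into one shared set of field names (`src_ip`, `timestamp`, and so on, all chosen for illustration), and searches then run against those stored fields with no parsing at query time. This is not how Graylog works internally; it only illustrates the principle.

```python
import re
from datetime import datetime, timezone

# Hypothetical raw lines from two sources that use different formats.
RAW_EVENTS = [
    ("firewall", "2024-01-08T09:15:02Z action=blocked src=203.0.113.7 dst=10.0.0.5 proto=tcp"),
    ("webserver", '203.0.113.7 - - [08/Jan/2024:09:15:03 +0000] "GET /login HTTP/1.1" 503'),
]

ACCESS_RE = re.compile(
    r'^(?P<src>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3})'
)

def normalize(source, line):
    """Parse one raw line into a shared schema, once, at ingestion time."""
    if source == "firewall":
        ts, _, rest = line.partition(" ")
        kv = dict(pair.split("=", 1) for pair in rest.split())
        return {
            "timestamp": datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc),
            "source": source,
            "src_ip": kv["src"],
            "dst_ip": kv["dst"],
            "action": kv["action"],
        }
    if source == "webserver":
        m = ACCESS_RE.match(line)
        if m is None:
            raise ValueError(f"unparseable access-log line: {line!r}")
        return {
            "timestamp": datetime.strptime(m["ts"], "%d/%b/%Y:%H:%M:%S %z"),
            "source": source,
            "src_ip": m["src"],
            "path": m["path"],
            "http_status": int(m["status"]),
        }
    raise ValueError(f"no parser for source {source!r}")

# Parsed once; the searches below never touch the raw text again.
STORE = [normalize(src, line) for src, line in RAW_EVENTS]

def search(store, **criteria):
    """Return stored events whose parsed fields equal every criterion."""
    return [e for e in store if all(e.get(k) == v for k, v in criteria.items())]

print(len(search(STORE, src_ip="203.0.113.7")))  # prints 2
```

Both events were normalized to the same `src_ip` field. As a result, one field lookup finds the address in both firewall and web server data.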

Want to make your senior leadership giddy? Tell them that structuring data on the front end also means you can store it more efficiently and cost-effectively.

Normalized Log Data for Lightning Fast Root Cause Analysis

Those service tickets are coming in. They’re coming in quickly, and there are a lot of them. People are telling you that the network is slow. They’re frustrated, especially in a remote work environment.

When you’re strapped for time, you don’t want to be writing queries. You want to be responding to tickets and resolving issues in real time. The sooner you can locate the root cause of the problem, the easier your life is.

If it’s not just a hardware failure, you’re going to need to be even faster. The longer it takes you to find the root cause of a potential DDoS attack, the longer your services stay down. It also gives a cybercriminal more time if the attack is cover for an attempt to steal your data.

For example, under the 1-10-60 Framework, you should be able to detect risky activity within a minute, investigate the alert within 10 minutes, and remediate the environment within 60 minutes. To do this, you need lightning-fast search capabilities.
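Checking an incident against the 1-10-60 targets is simple arithmetic on timestamps. The sketch below assumes you record four hypothetical times for an incident: first risky activity, detection, end of investigation, and remediation. It also assumes each phase is timed from the end of the previous one. Some teams measure cumulatively from the first activity instead, so adjust to your own definition.

```python
from datetime import datetime, timedelta

# Targets from the 1-10-60 rule.
TARGETS = {
    "detect": timedelta(minutes=1),
    "investigate": timedelta(minutes=10),
    "remediate": timedelta(minutes=60),
}

def check_1_10_60(first_activity, detected, investigated, remediated):
    """Return {phase: (elapsed, met_target)}.

    Assumption: each phase is timed from the end of the previous phase.
    """
    elapsed = {
        "detect": detected - first_activity,
        "investigate": investigated - detected,
        "remediate": remediated - investigated,
    }
    return {phase: (took, took <= TARGETS[phase]) for phase, took in elapsed.items()}

t0 = datetime(2024, 1, 8, 9, 0, 0)
report = check_1_10_60(
    t0,
    t0 + timedelta(seconds=45),              # detected after 45 s
    t0 + timedelta(minutes=12, seconds=45),  # investigation took 12 min
    t0 + timedelta(minutes=42, seconds=45),  # remediation took 30 min
)
for phase, (took, ok) in report.items():
    print(phase, took, "met" if ok else "MISSED")
```

In this example, detection and remediation meet their targets, but the 12-minute investigation misses the 10-minute goal. That is where faster searches over parsed data pay off.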

To correlate event data across your environment quickly enough, you need your data parsed in advance.
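Correlation depends on different sources sharing the same field names. As a rough sketch, assume events have already been normalized to a hypothetical `src_ip` field. The function below groups events per address within a time window and keeps only groups that span more than one source. The window logic is deliberately naive: each group is anchored on its first event.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical events already normalized to shared field names at ingestion.
EVENTS = [
    {"ts": datetime(2024, 1, 8, 9, 15, 2), "source": "firewall", "src_ip": "203.0.113.7"},
    {"ts": datetime(2024, 1, 8, 9, 15, 3), "source": "webserver", "src_ip": "203.0.113.7"},
    {"ts": datetime(2024, 1, 8, 9, 40, 0), "source": "vpn", "src_ip": "203.0.113.7"},
    {"ts": datetime(2024, 1, 8, 9, 15, 4), "source": "webserver", "src_ip": "198.51.100.9"},
]

def correlate(events, field, window):
    """Group events sharing `field` that fall within `window` of the group's first event."""
    by_value = defaultdict(list)
    for event in sorted(events, key=lambda e: e["ts"]):
        by_value[event[field]].append(event)
    groups = []
    for value, evs in by_value.items():
        current = [evs[0]]
        for event in evs[1:]:
            if event["ts"] - current[0]["ts"] <= window:
                current.append(event)
            else:
                groups.append((value, current))
                current = [event]
        groups.append((value, current))
    # Keep only groups where more than one source saw the same value.
    return [(v, g) for v, g in groups if len({e["source"] for e in g}) > 1]

hits = correlate(EVENTS, "src_ip", timedelta(minutes=5))
for value, group in hits:
    print(value, [e["source"] for e in group])
```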

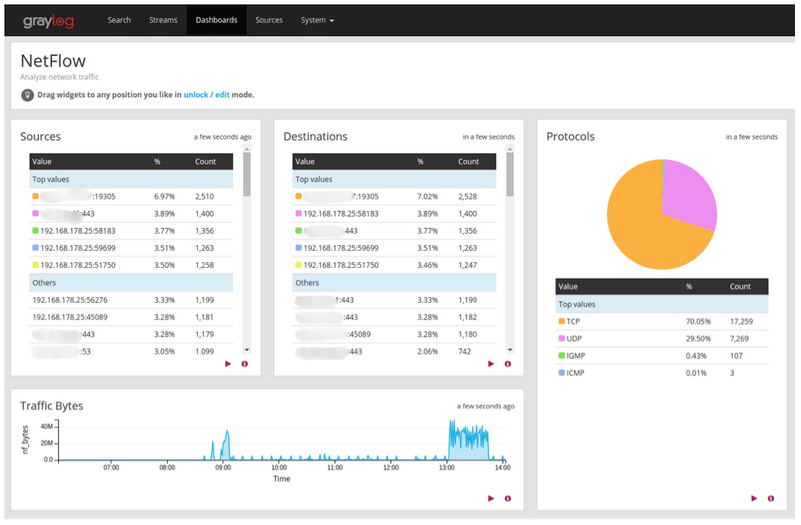

Example: Network Traffic Analysis

How do you get the visibility you need? Using a log management solution gives you the answers to help you quickly determine whether this is a performance bottleneck or a DDoS attack.

With log management done right, the process looks like this:

- View how IP addresses connect to each other

- Click on one to see its metadata, including:

-> Protocol used

-> Number of packets exchanged

-> Underlying infrastructure data

-> Whether the connection was accepted, rejected, or blocked

With all the data parsed in advance, you don’t need to spell out how to extract values as part of the query, and results come back faster. If you want to see all the messages where the source field is set to a specific value, such as a single IP address, you write a simple query: “source address equals.”
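Once a field is pre-parsed, the “source address equals” query becomes a plain equality check. The Python sketch below stands in for that query and for the connection view described above. The flow records and field names (`src`, `dst`, `protocol`, `packets`, `action`) are hypothetical, and this is not Graylog’s query language.

```python
from collections import defaultdict

# Hypothetical flow records whose fields were extracted at ingestion.
FLOWS = [
    {"src": "10.0.0.5", "dst": "203.0.113.7", "protocol": "tcp", "packets": 120, "action": "accepted"},
    {"src": "203.0.113.7", "dst": "10.0.0.5", "protocol": "udp", "packets": 9000, "action": "blocked"},
    {"src": "10.0.0.8", "dst": "10.0.0.5", "protocol": "tcp", "packets": 40, "action": "rejected"},
]

def field_equals(records, field, value):
    """Stand-in for a 'source address equals X' query on pre-parsed fields."""
    return [r for r in records if r.get(field) == value]

def connection_graph(records):
    """Map each IP to the set of IPs it exchanged traffic with, in either direction."""
    graph = defaultdict(set)
    for r in records:
        graph[r["src"]].add(r["dst"])
        graph[r["dst"]].add(r["src"])
    return graph

# "Click" on one address: its peers, plus the metadata of flows it sent.
print(sorted(connection_graph(FLOWS)["203.0.113.7"]))
for flow in field_equals(FLOWS, "src", "203.0.113.7"):
    print(flow["protocol"], flow["packets"], flow["action"])
```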

In Graylog, you don’t need to include all the different values of information you want to be returned in the query because we extract that data as part of the parsing process.

Since Graylog does the parsing up front, you simply click on a field to start an investigation. Then, the metadata shows up as a clickable link, meaning that you don’t need to write another long query, and the query doesn’t need to tell Graylog what field to search.

Whether you’re using this for proactive or reactive research, it makes the process easier. This can reduce the time you’re spending responding to service tickets or help you more rapidly detect a security issue.

Graylog was purposely designed around these use cases so that queries stay short and easy to write. Because the fields are already extracted, users can run analyses without building queries that spell out every data field they need.

Continuous Monitoring with Workflows and Parameters

Parsed event log data gives you a way to run continuous searches for better operational and security outcomes.

If you’re running manual queries against raw data, your searches will take a lot of time that you don’t have. Whether you’re trying to proactively solve operations issues or engage in threat hunting for security, you need to start looking for what you don’t know exists in your environment.

However, starting every day by spending an hour – or two or even three – running these searches isn’t an efficient way to spend your time.

Proactive Problem and Cybersecurity Risk Monitoring

When you parse data on the front end, you can build searches out in advance and schedule them to run regularly.

By building the query in advance and scheduling it to run daily, you can automatically get the insights you need.
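Here is a minimal sketch of a saved search that runs on a schedule, written in plain Python rather than any Graylog API. The `SavedSearch` class, its fields, and `run_due` are hypothetical. Each run only looks at events since the previous run, so a daily search reviews each day’s data once. The first run looks back over one interval.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Optional

@dataclass
class SavedSearch:
    name: str
    predicate: Callable[[dict], bool]  # the query, built once in advance
    every: timedelta                   # how often it should run
    last_run: Optional[datetime] = None

def run_due(searches, events, now):
    """Run each saved search that is due, over events since its last run."""
    results = {}
    for s in searches:
        if s.last_run is not None and now - s.last_run < s.every:
            continue  # not due yet
        since = s.last_run or now - s.every
        window = [e for e in events if since <= e["ts"] < now]
        results[s.name] = [e for e in window if s.predicate(e)]
        s.last_run = now
    return results

events = [
    {"ts": datetime(2024, 1, 8, 3, 0), "action": "blocked"},
    {"ts": datetime(2024, 1, 8, 4, 0), "action": "accepted"},
]
daily = SavedSearch("blocked-daily", lambda e: e["action"] == "blocked", timedelta(days=1))
now = datetime(2024, 1, 8, 6, 0)
print(run_due([daily], events, now))
```

In practice, a scheduler such as cron, or the log platform itself, would call `run_due` periodically. The sketch keeps the timing explicit so you can see what each run covers.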

With an intuitive centralized log management solution, you can view and find new research avenues at the click of a button to continually enhance operations and security.

Your team doesn’t always need dashboards when they can follow their curiosity. With Graylog, your IT teams can simply click on a value or click on a type of visualization. If they want to see data as a bar chart, they can. If they want to transform that data to a pie chart, Graylog supports a one-click process for that as well.

If they want to save the query as a dashboard to monitor the situation further, they can also do that in Graylog. The more your teams can use event log data effectively and efficiently, the better your operations and security programs are.

By building and scheduling search queries in advance, you can automate daily threat hunting activities that help you move toward a proactive security strategy aligned with the 1-10-60 framework.

Use Case: Monitoring an IP Address

Monitoring IP addresses is fundamental to network performance and network security.

From a network performance perspective, it can show important data about a destination IP like:

- Latency

- Jitter

- Packet loss

- Throughput

- Packet duplication

- Packet reordering
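Several of these performance metrics can be computed directly from parsed probe records. The sketch assumes a hypothetical input: the number of probes sent, plus a list of `(sequence_number, rtt_ms)` pairs in arrival order. Jitter here is a simplified mean of absolute differences between consecutive round-trip times, not the smoothed estimator defined in RFC 3550.

```python
from statistics import mean

def path_metrics(sent_count, received, bytes_received, duration_s):
    """received: list of (sequence_number, rtt_ms) tuples in arrival order."""
    seqs = [seq for seq, _ in received]
    rtts = [rtt for _, rtt in received]
    unique = set(seqs)
    # A drop in sequence number between consecutive arrivals signals reordering.
    reordered = sum(1 for a, b in zip(seqs, seqs[1:]) if b < a)
    jitter = mean(abs(b - a) for a, b in zip(rtts, rtts[1:])) if len(rtts) > 1 else 0.0
    return {
        "latency_ms": mean(rtts),
        "jitter_ms": jitter,
        "packet_loss_pct": 100.0 * (sent_count - len(unique)) / sent_count,
        "throughput_bps": 8 * bytes_received / duration_s,
        "duplicates": len(seqs) - len(unique),
        "reordered": reordered,
    }

# Five probes sent; probe 2 arrived late, probe 3 arrived twice, 4 and 5 were lost.
print(path_metrics(5, [(1, 20.0), (3, 24.0), (2, 22.0), (3, 24.0)], 4000, 2.0))
```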

From a security perspective, it can show important metadata about a destination IP like:

- Active inbound and outbound connections

- Associated events

- DNS queries

- Unusual activity
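The security view can be sketched the same way. This example assumes hypothetical parsed flow records with `src`, `dst`, and `state` fields, and DNS records with `client` and `query` fields. It also assumes a baseline set of peers the IP normally talks to. “Unusual activity” is reduced to one naive rule: active outbound connections to peers outside the baseline. That is far simpler than real anomaly detection.

```python
from collections import Counter

def ip_security_profile(ip, flows, dns_logs, baseline_peers):
    """Summarize one IP from parsed flow and DNS records (hypothetical schema)."""
    inbound = [f for f in flows if f["dst"] == ip and f["state"] == "active"]
    outbound = [f for f in flows if f["src"] == ip and f["state"] == "active"]
    related = [f for f in flows if ip in (f["src"], f["dst"])]
    queries = Counter(q["query"] for q in dns_logs if q["client"] == ip)
    peers = {f["dst"] for f in outbound}
    return {
        "active_inbound": len(inbound),
        "active_outbound": len(outbound),
        "associated_events": len(related),
        "dns_queries": dict(queries),
        # Naive rule: any active outbound peer not in the baseline is "unusual".
        "unusual_peers": sorted(peers - baseline_peers),
    }

FLOWS = [
    {"src": "10.0.0.5", "dst": "203.0.113.7", "state": "active"},
    {"src": "10.0.0.5", "dst": "10.0.0.1", "state": "active"},
    {"src": "198.51.100.9", "dst": "10.0.0.5", "state": "active"},
    {"src": "10.0.0.5", "dst": "10.0.0.2", "state": "closed"},
]
DNS = [
    {"client": "10.0.0.5", "query": "example.com"},
    {"client": "10.0.0.5", "query": "example.com"},
    {"client": "10.0.0.8", "query": "example.org"},
]
profile = ip_security_profile("10.0.0.5", FLOWS, DNS, baseline_peers={"10.0.0.1"})
print(profile)
```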

In other words, your centralized log management solution gives you a “twofer,” acting as two tools in one.

Using Graylog to Build Out Efficient Workflows with Normalized Data

Being prepared isn’t just the Boy Scouts’ motto. It’s a good way to approach security as well. Building out your queries and defining the data you want from your logs before you need to do an investigation saves time and money.

Maximum Flexibility with Customized Rules

Prepping your data is like doing weekly meal prep, where you do all the work on Sunday to eliminate work later in the week when you’re busy.

Knowing how you want to parse and use your data at ingestion might mean putting in extra work in the beginning. However, it makes everything else easier later on.

Defining the fields in your messages at ingestion is what makes fast, simple searches possible later.

Graylog’s approach to log management done right uses lightweight “if-then” rules that require little coding, so you can easily define how incoming messages are parsed. These rules define the fields in your messages, and Graylog then makes those fields available across the rest of its functionality.
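Graylog’s own pipeline rules are written in its rule language as `rule ... when ... then ... end` blocks. The Python sketch below only mimics that if-then shape to show the idea. Each hypothetical `Rule` pairs a condition with an action that sets fields. In this sketch, rules run in list order at ingestion, so later rules can use fields extracted by earlier ones.

```python
import re
from dataclasses import dataclass
from typing import Callable

@dataclass
class Rule:
    name: str
    when: Callable[[dict], bool]  # condition checked against the incoming message
    then: Callable[[dict], None]  # action that sets fields on the message

def extract_kv(msg):
    # Turn "key=value" pairs in the raw text into individual fields.
    msg.update(dict(re.findall(r"(\w+)=(\S+)", msg["message"])))

RULES = [
    Rule("parse key=value firewall logs",
         when=lambda m: m.get("app") == "firewall",
         then=extract_kv),
    Rule("tag blocked traffic",
         when=lambda m: m.get("action") == "blocked",
         then=lambda m: m.update(alert="true")),
]

def process(msg, rules=RULES):
    """Apply each rule whose condition matches, in order, at ingestion time."""
    for rule in rules:
        if rule.when(msg):
            rule.then(msg)
    return msg

print(process({"app": "firewall", "message": "action=blocked src=203.0.113.7 dst=10.0.0.5"}))
```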

Planning well at this point, and taking the extra time to do it right, will make everything else easier.

Power of Simplicity

Once you define how incoming data is parsed, you never have to do it again. Graylog was built around a fundamental belief that once you complete this work, you should have super simple, super powerful query capability without defining these rules again.

If you need to make changes as you expand your use cases, Graylog is flexible enough to accommodate them. Changes apply to all data written from that point on, which Graylog stores according to the new rules. While you can’t change the past, you can adapt and evolve your messages for the future.

Focus on the Data, Not the Use Case

Many companies struggle to define parsing rules because they start with the use cases that the data will serve.

However, the goal should be defining the fields to make the data meaningful. You gain greater flexibility when you parse the fields based on the information you need to know instead of how you think you might use the data.

For example, Graylog has a catalog that defines a multitude of data fields you can choose to extract from your logs. Once you set up your parsing rules, you can:

- Enrich data

- Use lookup tables

- Route data differently

- Encrypt or hash sensitive data contained in the logs

- Run regular expressions
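Each of these post-parsing steps can be sketched in a few lines. The example below is plain Python with hypothetical names:

- `ASSET_OWNERS` plays the role of a lookup table used to enrich the message.
- A regular expression finds an email address in free text.
- The address is replaced by a keyed HMAC-SHA256 digest; the `HASH_KEY` value is a placeholder.
- A simple condition routes the message to a stream.

The sketch uses a keyed hash rather than plain SHA-256 because unkeyed hashes of predictable values like email addresses can be reversed by guessing.

```python
import hashlib
import hmac
import re

# Hypothetical lookup table mapping IPs to the service that owns them.
ASSET_OWNERS = {"10.0.0.5": "payments-api", "10.0.0.8": "hr-portal"}
HASH_KEY = b"replace-with-a-secret-key"  # placeholder; keep real keys out of code
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")

def post_parse(msg):
    out = dict(msg)
    # Enrich: add the asset owner via the lookup table.
    out["asset_owner"] = ASSET_OWNERS.get(out.get("dst_ip"), "unknown")
    # Regular expression: find an email address, keep only a keyed hash of it.
    match = EMAIL_RE.search(out.get("message", ""))
    if match:
        out["user_hash"] = hmac.new(HASH_KEY, match.group().encode(), hashlib.sha256).hexdigest()
        out["message"] = EMAIL_RE.sub("[redacted]", out["message"])
    # Route: pick a destination stream based on parsed fields.
    out["stream"] = "security" if out.get("action") == "blocked" else "operations"
    return out

print(post_parse({"dst_ip": "10.0.0.5", "action": "blocked",
                  "message": "login failed for alice@example.com"}))
```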

In other words, look for the data types you want, extract that information, then use the flexibility built into Graylog’s centralized log management platform.

Graylog: Normalized Log Data for Operations and Security

Graylog Operations customers can use the Graylog Illuminate spotlights, which provide out-of-the-box, pre-configured rulesets. To get your Graylog instance up and running faster, you can start with these pre-configured parsing rules, which produce structured data you can search with simple queries. Illuminate can also act as a starting point before you decide to add your own customizations.

With Graylog, you don’t have to choose between a tool for managing service desk tickets or a security tool. Graylog Operations gives you the extra set of hands you need to manage both – and with lightning-fast speed so that you can meet or exceed your key performance indicators.