If you work with large amounts of log data, you know how challenging it can be to analyze that data and extract meaningful insights. One way to make log analysis easier is to normalize your log messages. In this post, we’ll explain why log message normalization is important and how to do it in Graylog.

Why is log message normalization important?

When log messages are generated by different systems or applications, they often have different formats and structures. For example, one application might log the date and time in a different format than another application, or it might use different names for the same field.

This can make it difficult to analyze log data and extract insights. Normalizing log messages involves transforming them into a consistent format that makes it easier to analyze and compare data across different systems and applications.

By normalizing log messages, you can:

- Improve searchability: When log messages are normalized, it’s easier to search for specific fields or values across different log sources.

- Increase efficiency: Normalized log messages are easier to parse and analyze, which can save time and resources.

- Reduce errors: When log messages are normalized, it’s less likely that errors will occur during log analysis due to inconsistencies in data formats.

How to normalize log messages in Graylog

Step 1: Define the log message format

The first step in normalizing log messages is to define the format of the log messages you want to normalize. This will involve identifying the fields in the log messages that are relevant for your use case.

For example, you might want to extract the following from each log message:

- date and time

- log level

- source IP address

- message text

The Graylog team has defined a format, or schema, for log messages called the Graylog Information Model (https://schema.graylog.org/en/stable/). We recommend using this schema for your logs, because all of the Dashboards, Event Definitions, and other content we provide under the Illuminate umbrella conform to this model.

Optional – Step 2: Create a Grok pattern

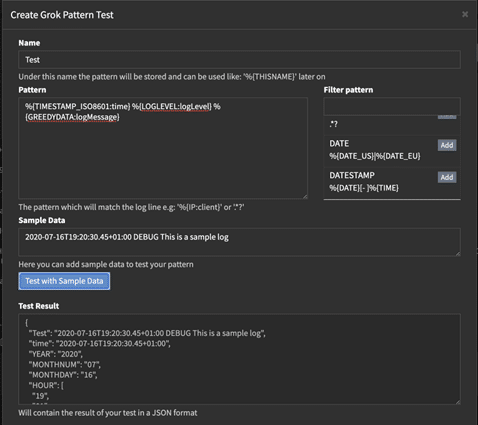

If your logs are not yet parsed, the next step after defining the log message format is to create a Grok pattern. Grok is a powerful tool for parsing unstructured log data into structured data that can be easily analyzed. A Grok pattern is built from regular expressions, usually referenced through named, reusable sub-patterns, that extract the relevant data fields from your log messages.

Graylog provides a Grok Debugger that you can use to test your Grok patterns. This can help you ensure that your patterns are accurate and that they correctly extract the relevant data fields from your log messages.
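
As an illustration, suppose a hypothetical application writes lines such as "2023-04-12T08:15:30Z ERROR 192.0.2.10 Connection refused". A pattern along the following lines could extract the four fields listed in Step 1. The sample line and the field names (log_timestamp, log_level, source_ip, message_text) are assumptions made for this sketch, so check them against your chosen schema. The sketch also presumes that the standard Grok pattern set (TIMESTAMP_ISO8601, LOGLEVEL, IP, GREEDYDATA) is available on your Graylog server.

```
%{TIMESTAMP_ISO8601:log_timestamp} %{LOGLEVEL:log_level} %{IP:source_ip} %{GREEDYDATA:message_text}
```

Each %{PATTERN:field} pair names a reusable pattern and the field in which its match is stored.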

Step 3: Create a message processing pipeline

The next step is to create a message processing pipeline. A message processing pipeline is a set of rules that defines how your log messages are processed.

To create a pipeline in Graylog:

- Go to System -> Pipelines and click “Create pipeline”.

- Give your pipeline a name and a description.

- Define the rules that will be applied to your log messages.
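
In its source form, a pipeline is a named list of stages, each of which references rules by name. The sketch below shows roughly what a normalization pipeline might look like. The pipeline name and both rule names are hypothetical, and the rules must already exist under those names before the pipeline can reference them.

```
pipeline "Log normalization"
stage 0 match either
  rule "parse raw message with grok";
  rule "rename src to source_ip";
end
```

With "match either", processing continues to any later stage as long as at least one rule in the stage matches. Stages run in ascending numeric order, so later cleanup rules could be placed in a stage 1.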

Field extraction with Grok

If your messages are not parsed at all, you will want to create a rule that uses your Grok pattern to extract the relevant data fields from your log messages. The pipeline rule function grok should be used here:
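
A minimal sketch of such a rule is shown below. It assumes the raw text is in the message field, that the standard Grok patterns are installed, and that the target field names (log_timestamp, log_level, source_ip, message_text) suit your schema. The rule name and these field names are illustrative only.

```
rule "parse raw message with grok"
when
  has_field("message")
then
  // Match the raw message text against the pattern; keep only named captures.
  let parsed = grok(
    pattern: "%{TIMESTAMP_ISO8601:log_timestamp} %{LOGLEVEL:log_level} %{IP:source_ip} %{GREEDYDATA:message_text}",
    value: to_string($message.message),
    only_named_captures: true
  );
  // Write every captured value onto the message as its own field.
  set_fields(parsed);
end
```

Setting only_named_captures to true keeps unnamed sub-pattern matches from being added as extra fields. For reference, the general signature of the function is: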

grok(pattern: string, value: string, [only_named_captures: boolean])

Renaming existing fields

If your messages are already being parsed by another mechanism, you can rename the fields to conform to your newly defined log schema. Graylog provides an easy-to-use pipeline function to perform this action:
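
As a sketch, a rule like the following could rename a field on every message that carries it. The old field name src and the new name source_ip are assumptions chosen for illustration. Substitute the names your existing parser produces and the names your schema expects.

```
rule "rename src to source_ip"
when
  // Only act on messages that actually carry the old field.
  has_field("src")
then
  rename_field(old_field: "src", new_field: "source_ip");
end
```

The has_field condition keeps the rule from running on messages that were never parsed into the old field. The general signature of the function is: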

rename_field(old_field: string, new_field: string, [message: Message])

Step 4: Verify your results

After you have applied your pipeline to your streams, you should start seeing normalized log messages in Graylog. To verify that your log messages are being normalized correctly, you can use Graylog’s search functionality to search for specific fields in your log messages.
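
For instance, search queries along these lines could help confirm the result. Each line is a separate query. They assume the hypothetical field names source_ip (normalized) and src (original), and they use the _exists_ operator of the Graylog search syntax.

```
_exists_:source_ip
_exists_:src
source_ip:192.0.2.10
```

The first query should return the normalized messages. The second should stop matching new messages once a rename is in place. The third looks up one specific value across every source that uses the normalized field.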

Conclusion

Log message normalization is an essential step in analyzing large amounts of log data. It transforms messages into a consistent format, which makes it easier to analyze and compare data across different systems and applications. Graylog provides the tools to do this: a schema for defining log message formats, Grok patterns for parsing, and message processing pipelines for applying rules. By following the steps outlined in this article, organizations can improve searchability, increase efficiency, reduce errors during log analysis, and extract meaningful insights from their log data.