Many IT professionals think of Graylog primarily as a security (SIEM) solution, and of course it can be used in that way to great effect.

However, Graylog’s industry-leading log aggregation, search, visualization, and classification capabilities go far beyond that role. Together, they give organizations extraordinary power to track and oversee practically any technical domain or combination of domains and, to a significant degree, the business ramifications of their technical infrastructure.

These capabilities also make Graylog an extremely powerful way to enhance, expand, and secure not just current technologies but emerging and future ones. Whatever those technologies turn out to do, Graylog can extend their reach in new ways and help them create new kinds of value.

Want an example? Consider artificial intelligence (AI). Few emerging tech categories have gotten more attention than AI in recent years, and for good reason.

If implemented properly, AI can sift through vast data repositories, find hidden patterns, and generate insights capable of taking a business from good to great and from great to world-class.

But the phrase “if implemented properly” also illustrates the challenge with that premise. AI is complex, and inexperienced teams — unfamiliar with those complexities — often get underwhelming results at first.

That’s why a successful AI engagement typically requires at least two things:

(1) Top-tier expertise: data scientists and software engineers who focus on AI

(2) Data: an enormous amount of it, and of the right type

Graylog can play a key role in addressing the second requirement. This matters because one of the hardest, slowest, and most operationally expensive obstacles to successful AI is aggregating the right initial data, including critically important log data.

Let’s consider some of the ways Graylog can help organizations overcome that obstacle.

AI, LIKE AN ANALYST, MUST BE TRAINED — BUT TRAINING IT REQUIRES A LARGE VOLUME OF KEY DATA, SUCH AS LOG DATA

Nearly all successful AI implementations in business today have a relatively narrow focus: rather than attempting to work across contexts (such as security, fraud detection, and natural language processing), they operate only within a given context, for a given purpose.

Business AI also typically relies on anomaly detection as its primary governing principle. The idea is to establish a normal baseline of operating conditions and then look for anomalies that extend beyond that baseline in one or more dimensions. When anomalies are detected, they are assessed to determine their nature and probable ramifications.
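To make the baseline-and-deviation idea concrete, here is a minimal sketch. It assumes numeric metrics have already been extracted from logs (the metric names `failed_logins` and `outbound_mb` and the sample values are hypothetical), and it uses a simple per-dimension z-score rule as a stand-in for whatever model a production AI would actually use. An observation is flagged on every dimension where it lies more than three standard deviations from the baseline mean.

```python
from statistics import mean, stdev


def fit_baseline(history):
    """Summarize normal operating conditions as {metric: (mean, stdev)}.

    `history` is a list of dicts, each mapping a metric name to a value
    observed while the system was known to be operating normally.
    """
    metrics = history[0].keys()
    return {
        m: (mean([h[m] for h in history]), stdev([h[m] for h in history]))
        for m in metrics
    }


def anomalous_dimensions(observation, baseline, threshold=3.0):
    """Return the metrics on which `observation` lies more than `threshold`
    standard deviations from its baseline mean (a simple z-score rule)."""
    flagged = []
    for metric, (mu, sigma) in baseline.items():
        # A zero-variance metric cannot produce a z-score; treat it as normal.
        z = (observation[metric] - mu) / sigma if sigma else 0.0
        if abs(z) > threshold:
            flagged.append(metric)
    return sorted(flagged)


# Hypothetical hourly metrics derived from logs during a known-normal period.
history = [
    {"failed_logins": 4, "outbound_mb": 120},
    {"failed_logins": 5, "outbound_mb": 130},
    {"failed_logins": 6, "outbound_mb": 110},
    {"failed_logins": 5, "outbound_mb": 125},
    {"failed_logins": 5, "outbound_mb": 115},
]
baseline = fit_baseline(history)

# A burst of failed logins stands out; outbound traffic stays within norms.
print(anomalous_dimensions({"failed_logins": 40, "outbound_mb": 121}, baseline))
# prints ['failed_logins']
```

A real system would learn far richer baselines (time of day, seasonality, correlations between dimensions), but the principle of "establish normal, then measure distance from it" is the same.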

The most familiar instance is probably in security. AI-based endpoint protection solutions usually work by assessing the normal operating context of endpoints, specifically with respect to the integrity of key files like scripts, executables, and dynamic libraries and the circumstances under which those files should or should not change.

Under some circumstances (for instance, a system update, a security patch, or a security process manually updated by an administrator), changes to those files are normal and desirable. Under other circumstances, they are much less likely to be.

The fundamental job of the AI is to detect and assess such changes, weigh them against the complete context of relevant operating conditions, evaluate the odds of malware or a live breach by a hacker, and then if necessary, take action based on that evaluation. That action might take various forms: notifying security administrators by generating an alert, interoperating with other security solutions, flagging events as possible compliance violations, and others.

Because security administrators, like all IT professionals, tend to be short on time, inundating them with a tsunami of false positives will not help. AI cybersecurity therefore succeeds when it generates as few false positives as possible while still correctly detecting malicious activity, even in the case of zero-day attacks for which there is no signature to recognize and no security patch or procedural response yet available from OS and app vendors.

If all this sounds rather similar to the behavior of a human security expert, that’s no coincidence — it’s actually the reason why these solutions are called AI in the first place. They are meant to play much the same role as a dedicated security administrator except, of course, they are infinitely replicable and dramatically faster in performing their work.

But just as human administrators require expertise, typically acquired via a process of years on the job, so too do AI solutions require expertise, typically acquired via pattern recognition algorithms that are repeatedly run against suitable training data.

Very large volumes of such data are necessary, especially when the data isn’t already classified and the AI itself is responsible for that crucial task. Where does that training data come from?

Many organizations find that an awkward question to answer. The data must be accurate (that is, representative of normal conditions) and therefore as clean and normalized as possible before the AI is trained on it. If the data is insufficient or inaccurately reflects operating norms, the AI will be poorly trained and will perform poorly once deployed in production systems.

GRAYLOG’S EXCEPTIONALLY FAST AND COMPREHENSIVE LOG INGESTION, SEARCHING, AND ARCHIVING CAPABILITIES DRIVE AI SUCCESS

This challenge — aggregating suitable data and delivering it to data scientists for training purposes — illustrates a key role Graylog can play in helping businesses create and perfect AI for their particular purposes.

Unlike some competing solutions, Graylog can ingest data volumes of virtually any size, and its ingestion is not confined to a particular class of assets or a particular business or technical domain. Graylog treats any asset that generates logs as a resource to leverage. That includes servers, of course, but also commercial apps, custom apps, firewalls, network assets such as switches and routers, core business databases, IT management solutions, and even (in many cases) operational assets like HVAC systems, pumps, and turbines.

Graylog also doesn’t require special connectors to reach such assets and their logs, as some alternatives do. This means not only that Graylog can be up and running in a matter of days (or, if the Graylog appliance is used, hours), but also that it can then draw on the complete range of available log data. Any necessary subset of that log data can therefore be aggregated and used to train AI solutions.

Graylog is also available in both an on-premises version and a cloud version, so organizations can choose whichever fits their infrastructure needs and context. Wherever in the logical infrastructure logs are generated, Graylog can ingest them.

Furthermore, it can do so with extraordinary speed. As a rule, competing solutions cannot match Graylog’s ingestion velocity, particularly among on-premises solutions.

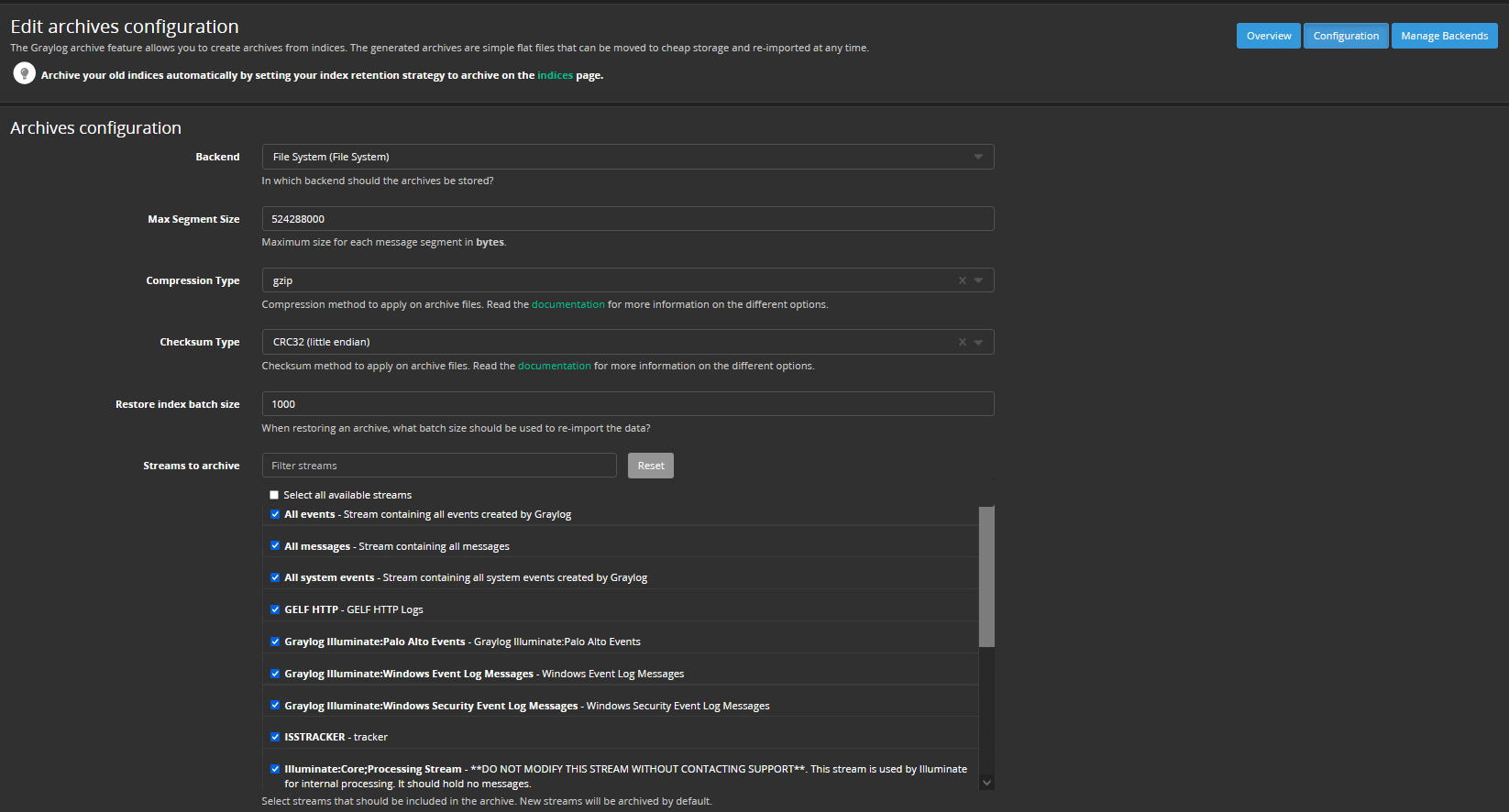

Additionally, Graylog supports log archiving. This means historical system logs, in addition to current system logs, can play a part in training AI.

In fact, that historical data may turn out to be even more valuable to the AI than the current data, because it can be correlated with problematic historical events (such as hardware failures, security breaches, service outages, and others) that followed the data chronologically.

An AI in development can therefore be tested by running it against historical data archived by Graylog, to see whether it successfully predicts the problematic events that the organization knows for certain subsequently occurred. If the AI can predict such events with suitable accuracy, the same test can usually also establish how much forewarning (measured in hours, days, or weeks) it typically provides.

Quantified predictions of this sort are a key test of AI efficacy and quality, because an organization notified that a problem will probably occur in two weeks can take remedial action immediately, potentially preventing that problem from ever manifesting and reducing its business impact to zero.
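The backtesting idea above can be sketched in a few lines. This is a minimal illustration, not a Graylog feature: it assumes the model's alerts and the organization's known incidents are both available as lists of timestamps (for example, alerts produced by replaying archived logs through the model, and incident dates taken from records), and the function name `backtest` and the 30-day lookback window are hypothetical choices. An incident counts as predicted if an alert fired within the window before it, with lead time measured from the earliest such alert; alerts not followed by any incident within the window count as false alarms.

```python
from datetime import datetime, timedelta
from statistics import median


def backtest(alerts, incidents, window=timedelta(days=30)):
    """Score model alerts against known historical incidents.

    Returns the share of incidents predicted, the median forewarning
    (lead time) for predicted incidents, and the number of false alarms.
    """
    lead_times = []
    for incident in incidents:
        # Alerts that fired within `window` before this incident.
        prior = [a for a in alerts if incident - window <= a < incident]
        if prior:
            lead_times.append(incident - min(prior))  # earliest warning
    # Alerts with no incident following them within `window`.
    false_alarms = [
        a for a in alerts if not any(a < i <= a + window for i in incidents)
    ]
    return {
        "detection_rate": len(lead_times) / len(incidents) if incidents else 0.0,
        "median_lead_time": median(lead_times) if lead_times else None,
        "false_alarms": len(false_alarms),
    }


# Hypothetical example: two known outages and three alerts from replaying
# archived logs through a candidate model.
incidents = [datetime(2023, 3, 10), datetime(2023, 6, 1)]
alerts = [datetime(2023, 2, 24), datetime(2023, 3, 1), datetime(2023, 4, 20)]
result = backtest(alerts, incidents)
print(result)
```

Here the March outage is predicted with 14 days of forewarning, the June outage is missed, and the April alert counts as a false alarm. Tracking all three numbers together reflects the trade-off described earlier: high detection and long lead times are only valuable if false alarms stay low.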

Graylog’s comprehensive connectivity, ingestion speed, and archiving capabilities are therefore right on point for data scientists and software engineers chartered to create the best possible AI. Log data will still have to be cleaned and normalized before the AI can be trained properly, which usually requires a custom process built by those data scientists and engineers. But Graylog empowers the AI team to make sure that:

1) There will be enough log data

2) The log data will be drawn from the correct and complete range of sources (spanning assets, technical domains, and business contexts)

3) The log data will include not just current data, but historical data

4) The log data will not only empower the team to create and train the AI, but also test the AI’s efficacy — well before the AI is actually rolled into production

5) The time required to create a release candidate of the AI substantially falls

6) The probability of the AI actually delivering its intended value substantially climbs

Thus, Graylog can play an invaluable role in creating successful AI: AI that generates actionable insights, notifies the organization of emerging problems in real time, and, in some cases, predicts those problems early enough that the organization can prevent them from ever manifesting at all.

Graylog also helps foster AI success not only when AI is developed in-house, but also when the organization has hired an external AI consultant or contractor.

Just as with in-house data scientists and engineers, external specialists will, in assessing your project requirements, typically want to begin with a large sample of your data. But unlike your own team, they shouldn’t be trusted with any more data than is necessary.

Graylog can help you ensure that AI consultants receive all the data they need and none of the data they don’t. Its straightforward interface makes it easy to deliver only the requisite logs, even when they span many different contexts, assets, users, and operational conditions, and it can typically do so in a matter of seconds, not hours or days. In most cases, in fact, Graylog doesn’t even require administrators to learn or use a dedicated query language; the bulk of searches, however complex, can be conducted right from the intuitive, browser-based GUI.
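The principle behind sharing "all the data they need and none of the data they don't" is minimization at two levels: which records are shared, and which fields within each record. The sketch below illustrates that principle on an already-exported set of log records; it does not use any Graylog API. The function name `minimize` and the field names (`timestamp`, `source`, `action`, `user`) are hypothetical assumptions chosen for illustration.

```python
from datetime import datetime


def minimize(records, sources, start, end, fields):
    """Return only records from approved `sources` with a timestamp in
    [start, end), each reduced to the approved `fields`."""
    return [
        {f: r[f] for f in fields if f in r}  # field-level minimization
        for r in records
        # record-level minimization: approved source and time range only
        if r.get("source") in sources and start <= r["timestamp"] < end
    ]


# Hypothetical exported records; "user" is sensitive and should not be shared.
records = [
    {"timestamp": datetime(2024, 1, 5, 10), "source": "fw-01", "action": "deny", "user": "alice"},
    {"timestamp": datetime(2024, 1, 5, 11), "source": "hr-db", "action": "select", "user": "bob"},
    {"timestamp": datetime(2024, 2, 1, 9), "source": "fw-01", "action": "allow", "user": "carol"},
]
shared = minimize(
    records,
    sources={"fw-01"},
    start=datetime(2024, 1, 1),
    end=datetime(2024, 2, 1),
    fields=["timestamp", "source", "action"],
)
print(shared)
```

Only the January firewall record survives, and without its `user` field; the HR database record and the out-of-range February record are excluded entirely.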

If you want to leverage the power of AI in your organization and are interested in learning how Graylog can improve your odds of success, feel free to reach out to us — we’re always glad to explore the possibilities and answer any relevant questions.