Businesses are generating vast amounts of data from various sources, including applications, servers, and networks. As the volume and complexity of this data continue to grow, it becomes increasingly challenging to manage and analyze it effectively. Centralized logging is a powerful solution to this problem, providing a single, unified location for collecting, storing, and analyzing log data from across an organization’s IT infrastructure.

While logging is an essential part of IT system management and monitoring, too much logging can create problems that impact system performance, security, and management.

What are the Potential Problems of Excessive Logging?

Increased storage requirements

Storing logs can consume significant amounts of storage resources, especially for large-scale systems generating a high volume of data. Too much logging can lead to storage capacity issues, resulting in slow system performance and potential data loss.
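A rough estimate helps put numbers on this. Retained storage is roughly events per second × average event size × 86,400 seconds per day × retention days × stored copies. The Python sketch below computes that figure. The event rate, event size, retention period and copy count are hypothetical values chosen for illustration, and the estimate ignores compression and index overhead.

```python
"""Back-of-envelope estimate of log storage; every input below is hypothetical."""

SECONDS_PER_DAY = 24 * 60 * 60


def daily_log_gb(events_per_second: float, avg_event_bytes: float) -> float:
    """Raw log volume per day in gigabytes (1 GB = 10**9 bytes)."""
    return events_per_second * avg_event_bytes * SECONDS_PER_DAY / 1e9


def retained_gb(events_per_second: float, avg_event_bytes: float,
                retention_days: int, copies: int = 1) -> float:
    """Storage needed to keep `retention_days` of logs, times the number of
    stored copies (for example, a primary plus one replica = 2 copies).
    Ignores compression and index overhead."""
    return daily_log_gb(events_per_second, avg_event_bytes) * retention_days * copies


if __name__ == "__main__":
    # Hypothetical workload: 2,000 events/s of ~500 bytes, kept 30 days, 2 copies.
    before = retained_gb(2_000, 500, retention_days=30, copies=2)
    # The same workload after half of the events are filtered out at the source.
    after = retained_gb(1_000, 500, retention_days=30, copies=2)
    print(f"before filtering: {before:,.0f} GB")  # before filtering: 5,184 GB
    print(f"after filtering:  {after:,.0f} GB")   # after filtering:  2,592 GB
```

With these assumed inputs, halving the event rate halves the storage requirement, from about 5.2 TB to about 2.6 TB.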

Performance degradation

Logging requires resources, including processing power, memory, and disk I/O. Excessive logging can overload system resources, leading to performance degradation and even system crashes.

Security risks

Logging sensitive information such as passwords, IP addresses, and other user data can create security risks if the logs are not adequately protected. Attackers can potentially exploit these logs to gain unauthorized access to the system or sensitive data.

Compliance issues

Logging can generate a vast amount of data that must be managed in accordance with industry regulations, such as HIPAA or GDPR. Too much logging can make it difficult to manage and report on compliance requirements, leading to potential legal and financial consequences.

Difficulty in identifying important logs

With too much logging, it can be difficult to identify and prioritize the critical logs that require attention. This can result in IT teams missing important alerts or not addressing critical issues in a timely manner.

How Can You Reduce Log Volume?

In this article I am going to walk through some ways we can reduce the volume of logs that we are collecting.

Source Filtering

You may be able to filter messages directly at the log source. Many network devices, such as firewalls, let you configure log forwarding filters so that only relevant messages are sent via Syslog or another protocol.
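Each vendor exposes these filters in its own configuration interface, so the exact settings vary. The Python sketch below illustrates the kind of logic such a filter commonly applies: it forwards only Syslog messages whose severity is warning or more severe. It assumes the device prefixes each message with a standard Syslog priority value, `<PRI>`, where PRI = facility × 8 + severity, and severity runs from 0 (emergency) to 7 (debug). The `severity_of` and `should_forward` helpers and the sample firewall lines are hypothetical.

```python
import re
from typing import Optional

# Syslog severities, most severe first (0 = emergency ... 7 = debug).
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]
WARNING = SEVERITIES.index("warning")  # 4

PRI_RE = re.compile(r"^<(\d{1,3})>")


def severity_of(line: str) -> Optional[int]:
    """Return the severity encoded in a Syslog line's <PRI> prefix, or None."""
    match = PRI_RE.match(line)
    if match is None:
        return None
    pri = int(match.group(1))
    if pri > 191:  # facility 23 * 8 + severity 7 is the largest valid PRI
        return None
    return pri % 8  # PRI = facility * 8 + severity


def should_forward(line: str, threshold: int = WARNING) -> bool:
    """Forward messages at or above the threshold severity (lower number = more severe).

    Lines without a valid PRI are forwarded so that nothing is dropped silently."""
    severity = severity_of(line)
    return severity is None or severity <= threshold


if __name__ == "__main__":
    lines = [
        "<134>Dec 25 12:16:50 fw01 allowed tcp 10.0.0.5 -> 10.0.0.9:443",   # local0.info
        "<132>Dec 25 12:16:51 fw01 denied tcp 203.0.113.7 -> 10.0.0.9:22",  # local0.warning
    ]
    for line in lines:
        print("forward" if should_forward(line) else "drop", line)
```

Forwarding lines that have no valid priority is a deliberately conservative choice. A malformed message is more likely to point to a problem than to be noise.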

Log shipping agents such as Beats also let you filter messages in the agent configuration. The Winlogbeat configuration below reduces the noise sent to the central log management (CLM) platform. It ships only critical, error, and warning events from the Application log, and only a short list of event IDs from the Security log:

```yaml
winlogbeat.event_logs:
  # Application log: ship only critical, error, and warning events from the last 48 hours.
  - name: Application
    level: critical, error, warning
    ignore_older: 48h

  # Security log: ship only the event IDs listed below.
  - name: Security
    processors:
      # Drop the event unless its ID matches one of these conditions.
      # Winlogbeat 7.0 and later store the ID in winlog.event_id, so check the
      # field name against the Winlogbeat version you run.
      - drop_event.when.not.or:
          - equals.event_id: 129
          - equals.event_id: 141
          - equals.event_id: 1102
          - equals.event_id: 4648
          - equals.event_id: 4657
          - equals.event_id: 4688
          - equals.event_id: 4697
          - equals.event_id: 4698
          - equals.event_id: 4720
          - equals.event_id: 4738
          - equals.event_id: 4767
          - equals.event_id: 4728
          - equals.event_id: 4732
          - equals.event_id: 4634
          - equals.event_id: 4735
          - equals.event_id: 4740
          - equals.event_id: 4756
    level: critical, error, warning, information
    ignore_older: 48h
```

Graylog Sidecar is a great way to manage the configuration of log collectors such as Winlogbeat and Filebeat, and to maintain the filtering configuration you apply at the agent level.

One of the big advantages of filtering at the source is that it reduces the processing load on your Graylog instance, because Graylog doesn't need any extra processing steps to remove these messages. It also reduces traffic on network links, which can make a noticeable difference when log volumes are large.
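The Python model below makes the effect of the Winlogbeat configuration concrete by reproducing its filtering decisions. It is an illustration, not Winlogbeat's implementation. It treats the per-log `level` list and the Security `drop_event.when.not.or` processor as two checks that must both pass, it ignores `ignore_older`, and it uses a hypothetical `WinEvent` record with lower-case level names.

```python
from dataclasses import dataclass

# Event IDs allowed through by the drop_event.when.not.or processor.
SECURITY_EVENT_IDS = {
    129, 141, 1102, 4648, 4657, 4688, 4697, 4698, 4720,
    4738, 4767, 4728, 4732, 4634, 4735, 4740, 4756,
}

# Levels accepted per event log, copied from the `level` settings.
ALLOWED_LEVELS = {
    "Application": {"critical", "error", "warning"},
    "Security": {"critical", "error", "warning", "information"},
}


@dataclass
class WinEvent:
    """Hypothetical, minimal stand-in for a Windows event record."""
    channel: str   # event log name, e.g. "Security"
    event_id: int
    level: str     # lower-case level name, e.g. "information"


def keep(event: WinEvent) -> bool:
    """Return True if the example configuration would ship this event."""
    levels = ALLOWED_LEVELS.get(event.channel)
    if levels is None:
        return False  # the log is not listed in winlogbeat.event_logs
    if event.level not in levels:
        return False  # filtered out by the `level` setting
    if event.channel == "Security":
        # drop_event.when.not.or: drop unless the ID matches a condition.
        return event.event_id in SECURITY_EVENT_IDS
    return True


if __name__ == "__main__":
    events = [
        WinEvent("Security", 4624, "information"),     # not in the ID list: dropped
        WinEvent("Security", 4720, "information"),     # in the ID list: shipped
        WinEvent("Application", 1000, "error"),        # error level: shipped
        WinEvent("Application", 1001, "information"),  # below the level filter: dropped
    ]
    shipped = [e for e in events if keep(e)]
    print(f"shipping {len(shipped)} of {len(events)} events")  # shipping 2 of 4 events
```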

Grok Parse Those Logs!

Once a log message reaches Graylog, there are several ways to parse it. If the message uses a standardized format such as Syslog or GELF, Graylog can handle much of the parsing with little input. If you're using Graylog Illuminate content, parsing is also handled for you.

For everything else, we often need a Grok pattern to parse our logs, and this is where further filtering can be applied.

In Graylog, Grok patterns can be used with both extractors and processing pipelines to parse the log messages. The opportunity to reduce the log volume arises when we decide which fields are valuable and worth parsing, and which fields can be dropped.

If we take the example log message:

```
128.39.24.23 - - [25/Dec/2021:12:16:50 +0000] "GET /category/electronics HTTP/1.1" 200 61 "/category/finance" "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"
```

The following Grok pattern could be used to extract fields from the message:

```
%{IP:ip} - - \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request}" %{NUMBER:status} %{NUMBER:bytes} "%{DATA:referrer}" "%{DATA:user_agent}"
```

Result:

```
ip: 128.39.24.23
timestamp: 25/Dec/2021:12:16:50 +0000
verb: GET
request: /category/electronics HTTP/1.1
status: 200
bytes: 61
referrer: /category/finance
user_agent: Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)
```
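A Grok pattern is shorthand for a regular expression. Each `%{PATTERN:field}` reference expands to a regex fragment wrapped in a named capture group. The Python sketch below performs that expansion and parses the example log line. It uses a deliberately simplified pattern table: the real `IP`, `HTTPDATE`, and `NUMBER` definitions are more thorough, while `DATA` is the lazy `.*?`. The pattern encloses the user agent in quotes, which gives the lazy `DATA` pattern a closing delimiter to stop at. The `grok_to_regex` and `grok` helpers are hypothetical, not Graylog's API.

```python
import re
from typing import Dict

# Simplified stand-ins for the standard Grok patterns in the example.
# The real definitions are more thorough (IP also matches IPv6, for instance).
BASE_PATTERNS = {
    "IP": r"\d{1,3}(?:\.\d{1,3}){3}",
    "HTTPDATE": r"\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4}",
    "WORD": r"\b\w+\b",
    "NUMBER": r"[+-]?\d+(?:\.\d+)?",
    "DATA": r".*?",  # lazy: matches as little as possible
}

# Matches %{NAME} and %{NAME:field} references.
GROK_REF = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def grok_to_regex(pattern: str) -> str:
    """Expand %{NAME:field} to a named group and %{NAME} to a non-capturing group."""
    def expand(ref: "re.Match[str]") -> str:
        name, field = ref.group(1), ref.group(2)
        body = BASE_PATTERNS[name]
        return f"(?P<{field}>{body})" if field else f"(?:{body})"

    # A function replacement is inserted literally, so backslashes survive.
    return GROK_REF.sub(expand, pattern)


def grok(pattern: str, value: str) -> Dict[str, str]:
    """Return the named captures, or an empty dict if the pattern does not match."""
    match = re.search(grok_to_regex(pattern), value)
    return match.groupdict() if match else {}


PATTERN = (
    r'%{IP:ip} - - \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request}" '
    r'%{NUMBER:status} %{NUMBER:bytes} "%{DATA:referrer}" "%{DATA:user_agent}"'
)
LINE = (
    '128.39.24.23 - - [25/Dec/2021:12:16:50 +0000] "GET /category/electronics HTTP/1.1" '
    '200 61 "/category/finance" '
    '"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"'
)

if __name__ == "__main__":
    for key, value in grok(PATTERN, LINE).items():
        print(f"{key}: {value}")
```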

Dropping Fields with Grok

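If some of these fields aren't useful, we can drop them by removing the field name from the corresponding reference in the Grok pattern. For example, %{DATA:referrer} becomes %{DATA}. That part of the message still has to match, but no field is created for it as long as only named captures are kept (see below):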

```
%{IP:ip} - - \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request}" %{NUMBER:status} %{NUMBER:bytes} "%{DATA}" "%{DATA}"
```

Result:

```
ip: 128.39.24.23
timestamp: 25/Dec/2021:12:16:50 +0000
verb: GET
request: /category/electronics HTTP/1.1
status: 200
bytes: 61
```

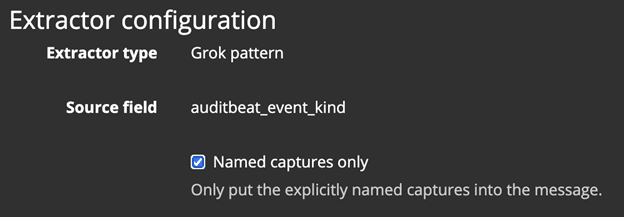

When using this technique in Graylog, make sure you set only_named_captures to true in the grok() pipeline function. Otherwise, the unnamed patterns still produce fields:

```
grok(pattern, value, [only_named_captures])
```

If you use an extractor instead, enable the equivalent "Named captures only" option in the Grok extractor configuration.
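The Python sketch below approximates what this option changes. It expands each Grok reference into a regex capture group using a deliberately simplified pattern table. As an assumption for illustration only, it reports an unnamed `%{NAME}` reference under the pattern name itself. Graylog's actual field names for unnamed captures may differ, and Graylog can also emit captures from nested patterns. With `only_named_captures=True`, the two unnamed `%{DATA}` references still have to match, but they produce no fields.

```python
import re
from typing import Dict, Tuple

# Simplified stand-ins for standard Grok patterns (an assumption, not the real set).
BASE_PATTERNS = {
    "IP": r"\d{1,3}(?:\.\d{1,3}){3}",
    "HTTPDATE": r"\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4}",
    "WORD": r"\b\w+\b",
    "NUMBER": r"[+-]?\d+(?:\.\d+)?",
    "DATA": r".*?",
}
GROK_REF = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def grok(pattern: str, value: str, only_named_captures: bool = False) -> Dict[str, str]:
    """Match `value` against a Grok-style pattern.

    Named references (%{NAME:field}) are reported as `field`. Unnamed ones
    (%{NAME}) are reported under NAME unless only_named_captures is True."""
    labels: Dict[str, Tuple[str, bool]] = {}  # regex group -> (field name, was named?)

    def expand(ref: "re.Match[str]") -> str:
        name, field = ref.group(1), ref.group(2)
        group = f"g{len(labels)}"  # unique group name, since labels may repeat
        labels[group] = (field or name, field is not None)
        return f"(?P<{group}>{BASE_PATTERNS[name]})"

    match = re.search(GROK_REF.sub(expand, pattern), value)
    if match is None:
        return {}
    result: Dict[str, str] = {}
    for group, (label, was_named) in labels.items():
        if was_named or not only_named_captures:
            result[label] = match.group(group)  # a later duplicate overwrites an earlier one
    return result


PATTERN = (
    r'%{IP:ip} - - \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request}" '
    r'%{NUMBER:status} %{NUMBER:bytes} "%{DATA}" "%{DATA}"'
)
LINE = (
    '128.39.24.23 - - [25/Dec/2021:12:16:50 +0000] "GET /category/electronics HTTP/1.1" '
    '200 61 "/category/finance" '
    '"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"'
)

if __name__ == "__main__":
    print(sorted(grok(PATTERN, LINE, only_named_captures=True)))
    # ['bytes', 'ip', 'request', 'status', 'timestamp', 'verb']
    print(sorted(grok(PATTERN, LINE)))
    # ['DATA', 'bytes', 'ip', 'request', 'status', 'timestamp', 'verb']
```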

Pipelines

Pipelines are a powerful processing component of Graylog that enables fine-grained control of the processing applied to messages. Pipelines contain rules and can be connected to one or more streams.

The rules that make up pipelines are written using a simple programming notation combined with various built-in functions.

Dropping Messages and Fields

Two of the most useful functions for reducing the log volume are:

drop_message()

remove_field()

We’ll use the example of the fully parsed web server log message:

```
128.39.24.23 - - [25/Dec/2021:12:16:50 +0000] "GET /category/electronics HTTP/1.1" 200 61 "/category/finance" "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)"
```

```
ip: 128.39.24.23
timestamp: 25/Dec/2021:12:16:50 +0000
verb: GET
request: /category/electronics HTTP/1.1
status: 200
bytes: 61
referrer: /category/finance
user_agent: Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)
```

Drop Message Function

Let's look at drop_message() first. In a pipeline rule, we first identify the messages we want to drop. In my example, I'm dropping messages with the status code "200". We then call the drop_message() function:

```
rule "drop_message"
when
  contains(to_string($message.status), "200")
then
  drop_message();
end
```

This will remove the message from the stream and reduce your log storage and licensing volume.
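The Python sketch below illustrates how such a rule thins out a batch of messages. It models the rule as a condition (the `when` block) and a drop action (the `then` block). It is a model of the idea, not Graylog's pipeline engine. The `drop_when` and `status_contains_200` helpers and the sample messages are hypothetical, and treating a missing `status` field as an empty string is an assumption of this sketch.

```python
from typing import Callable, Dict, Iterable, List

Message = Dict[str, object]


def drop_when(messages: Iterable[Message],
              condition: Callable[[Message], bool]) -> List[Message]:
    """Keep only the messages for which `condition` is False, mimicking a rule
    whose `then` block calls drop_message()."""
    return [msg for msg in messages if not condition(msg)]


def status_contains_200(msg: Message) -> bool:
    """Mirrors contains(to_string($message.status), "200"); a missing field
    is treated as an empty string (an assumption of this sketch)."""
    return "200" in str(msg.get("status", ""))


if __name__ == "__main__":
    # Hypothetical parsed messages.
    messages = [
        {"request": "/category/electronics HTTP/1.1", "status": "200"},
        {"request": "/cart HTTP/1.1", "status": "404"},
        {"request": "/checkout HTTP/1.1", "status": "500"},
    ]
    kept = drop_when(messages, status_contains_200)
    print(f"kept {len(kept)} of {len(messages)} messages")  # kept 2 of 3 messages
```

Note that `contains` is a substring test, so a value such as "2001" in the same field would also match. For three-digit HTTP status codes this makes no difference, but an exact comparison would be stricter for other fields.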

Removing Individual Fields

If we only want to remove specific fields from a message, we can use the remove_field() function instead.

We'll use the same parsed web server log message as in the previous example.

First we select the message we want to modify, and then apply the remove_field() function to drop the relevant field(s):

```
rule "remove_field"
when
  has_field("user_agent")
then
  remove_field("user_agent");
end
```

This will result in the following parsed message:

```
ip: 128.39.24.23
timestamp: 25/Dec/2021:12:16:50 +0000
verb: GET
request: /category/electronics HTTP/1.1
status: 200
bytes: 61
referrer: /category/finance
```
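To gauge whether removing a field is worth it, you can compare the size of the message before and after. The Python sketch below does this using compact JSON length as a rough proxy for stored size. Real index storage adds overhead and may compress data, so treat the result as an approximation. The `remove_fields` and `encoded_size` helpers and the daily message count are hypothetical.

```python
import json
from typing import Any, Dict, Iterable

Message = Dict[str, Any]


def remove_fields(message: Message, fields: Iterable[str]) -> Message:
    """Return a copy of `message` without the given fields, similar in spirit
    to calling remove_field() for each one in a pipeline rule."""
    drop = set(fields)
    return {key: value for key, value in message.items() if key not in drop}


def encoded_size(message: Message) -> int:
    """Bytes needed to serialize the message as compact JSON (a rough proxy
    for stored size)."""
    return len(json.dumps(message, separators=(",", ":")).encode("utf-8"))


PARSED = {
    "ip": "128.39.24.23",
    "timestamp": "25/Dec/2021:12:16:50 +0000",
    "verb": "GET",
    "request": "/category/electronics HTTP/1.1",
    "status": "200",
    "bytes": "61",
    "referrer": "/category/finance",
    "user_agent": "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0)",
}

if __name__ == "__main__":
    trimmed = remove_fields(PARSED, ["user_agent"])
    saved = encoded_size(PARSED) - encoded_size(trimmed)
    # Hypothetical daily volume, used only to scale the per-message saving.
    messages_per_day = 10_000_000
    print(f"saved per message: {saved} bytes")
    print(f"saved per day: {saved * messages_per_day / 1e9:.2f} GB")
```

Long free-text fields such as user agents are usually the best candidates, because they contribute far more bytes per message than short fields like the status code.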

Conclusion

Managing logs well is critical to maintaining an efficient and secure IT infrastructure. Logging is essential for monitoring and troubleshooting, but too much of it can hurt system performance, security, and manageability. This article has covered three ways to reduce the volume of logs being collected: filtering at the source, using Grok patterns to parse only the fields you need, and using Graylog pipelines to drop messages and remove fields. With these techniques, organizations can collect only the logs they need, which reduces storage requirements, improves system performance, and limits the exposure of sensitive data. The goal is to strike a balance: collect enough logs to monitor systems and troubleshoot issues, but not so many that they become overwhelming to manage and analyze. Because needs change over time, organizations should review their logging practices regularly to keep them suited to their requirements.